|

Hello! My name is Yafu Li, and I am a third-year PhD student in a joint program of Zhejiang University and Westlake University, supervised by Prof. Yue Zhang. I am currently an intern at Tencent AI Lab, where I am mentored by Dr. Leyang Cui and Dr. Wei Bi.

Email / CV(En/中文) / Google Scholar / Semantic Scholar / Twitter / Github |

|

|

My research focuses on machine translation and natural language generation, with a recent emphasis on LLM-related topics. You can find our recent work on detecting AI-generated text at DeepfakeTextDetect. |

|

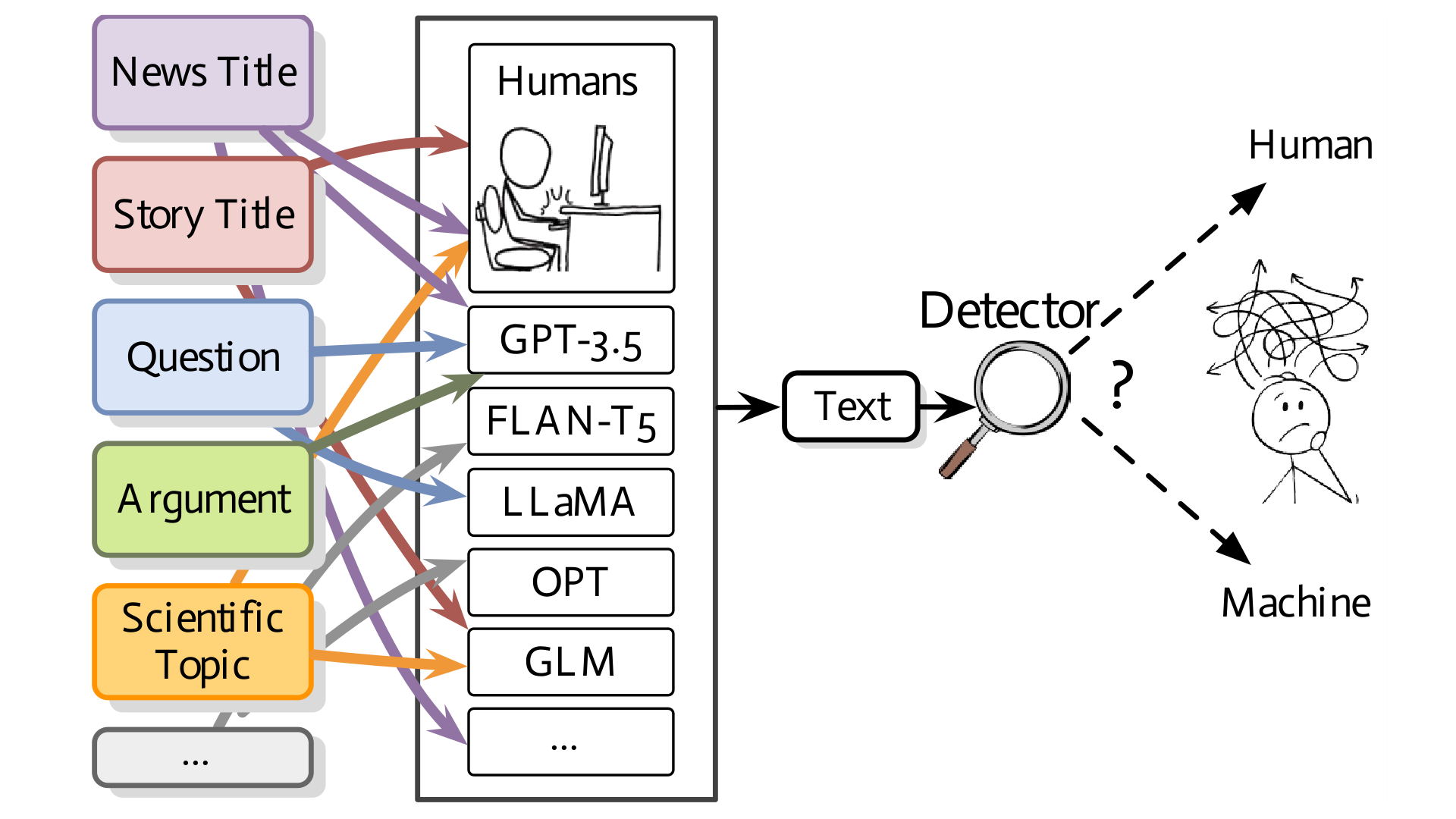

Yafu Li, Qintong Li, Leyang Cui, Wei Bi, Longyue Wang, Linyi Yang, Shuming Shi, Yue Zhang preprint project page / paper link We present a comprehensive benchmark dataset designed to assess the proficiency of deepfake text detectors in real-world scenarios. |

|

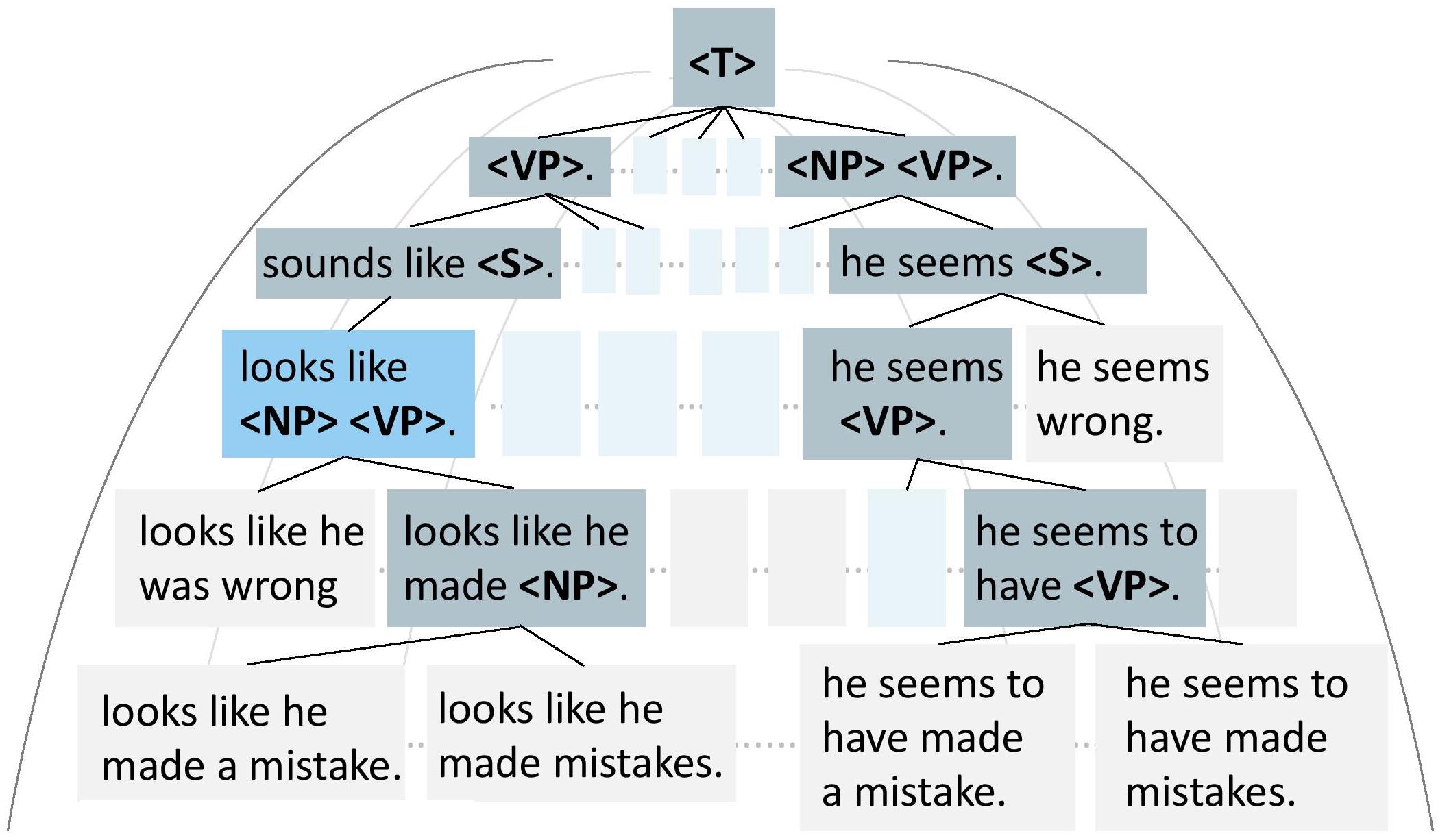

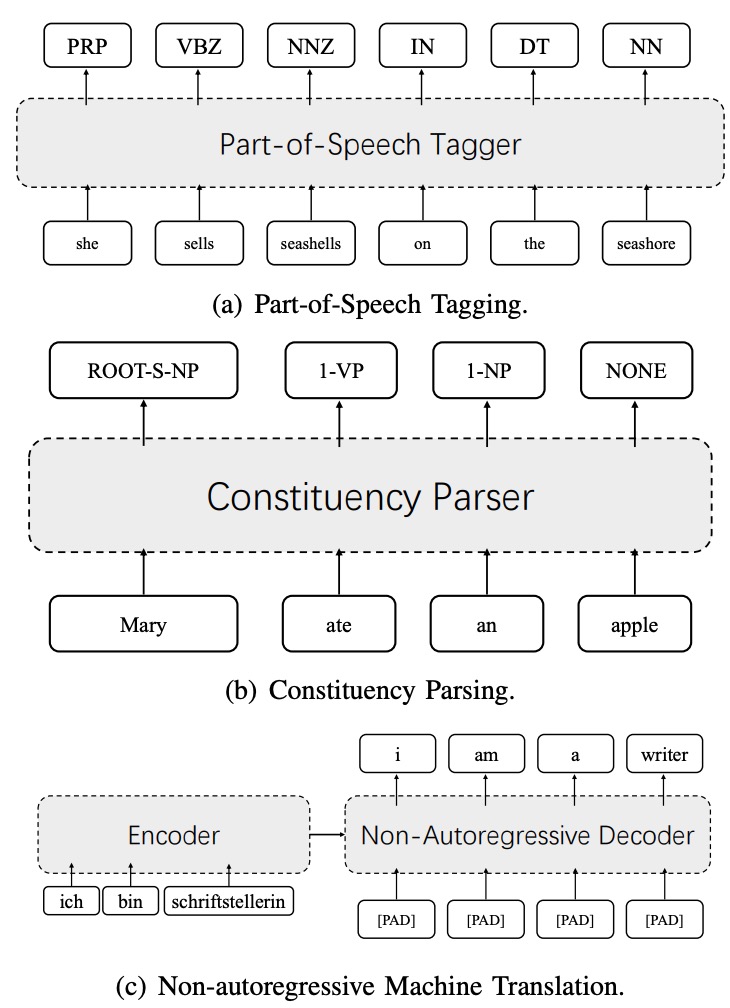

Yafu Li, Leyang Cui, Jianhao Yan, Yongjing Yin, Wei Bi, Shuming Shi, Yue Zhang ACL, 2023, Best Paper Nomination (1.6%) project page / paper link We propose a syntax-guided generation schema, which generates the sequence guided by a constituency parse tree in a top-down direction. |

|

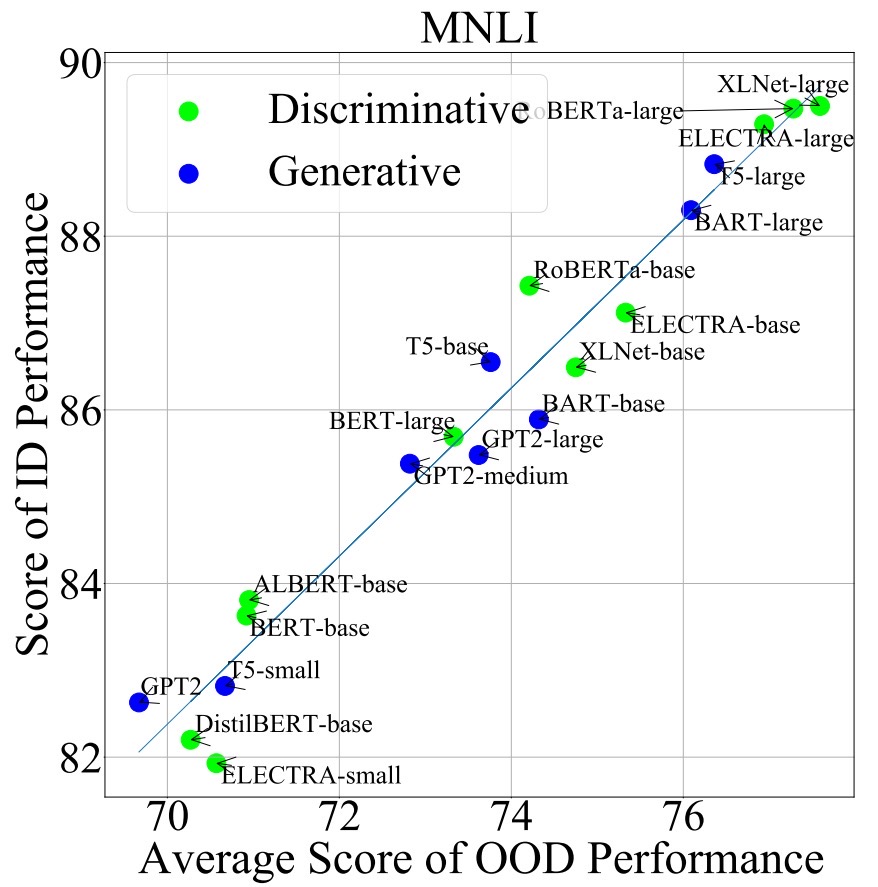

Linyi Yang, Shuibai Zhang, Libo Qin, Yafu Li, Yidong Wang, Hanmeng Liu, Jindong Wang, Xing Xie, Yue Zhang ACL Findings, 2023 project page / paper link We present GLUE-X, the first attempt at a unified benchmark for evaluating OOD robustness in NLP models. |

|

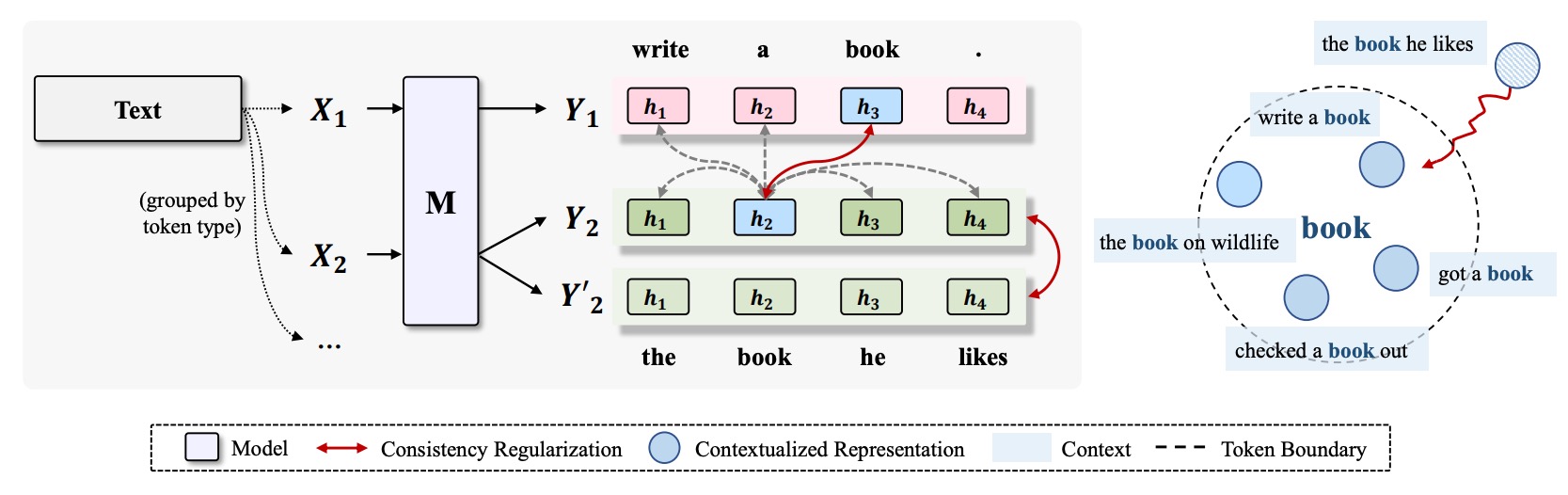

Yongjing Yin, Jiali Zeng, Yafu Li, Fandong Meng, Jie Zhou, Yue Zhang ACL, 2023 project page / paper link We propose to boost compositional generalization of neural models through consistency regularization training. |

|

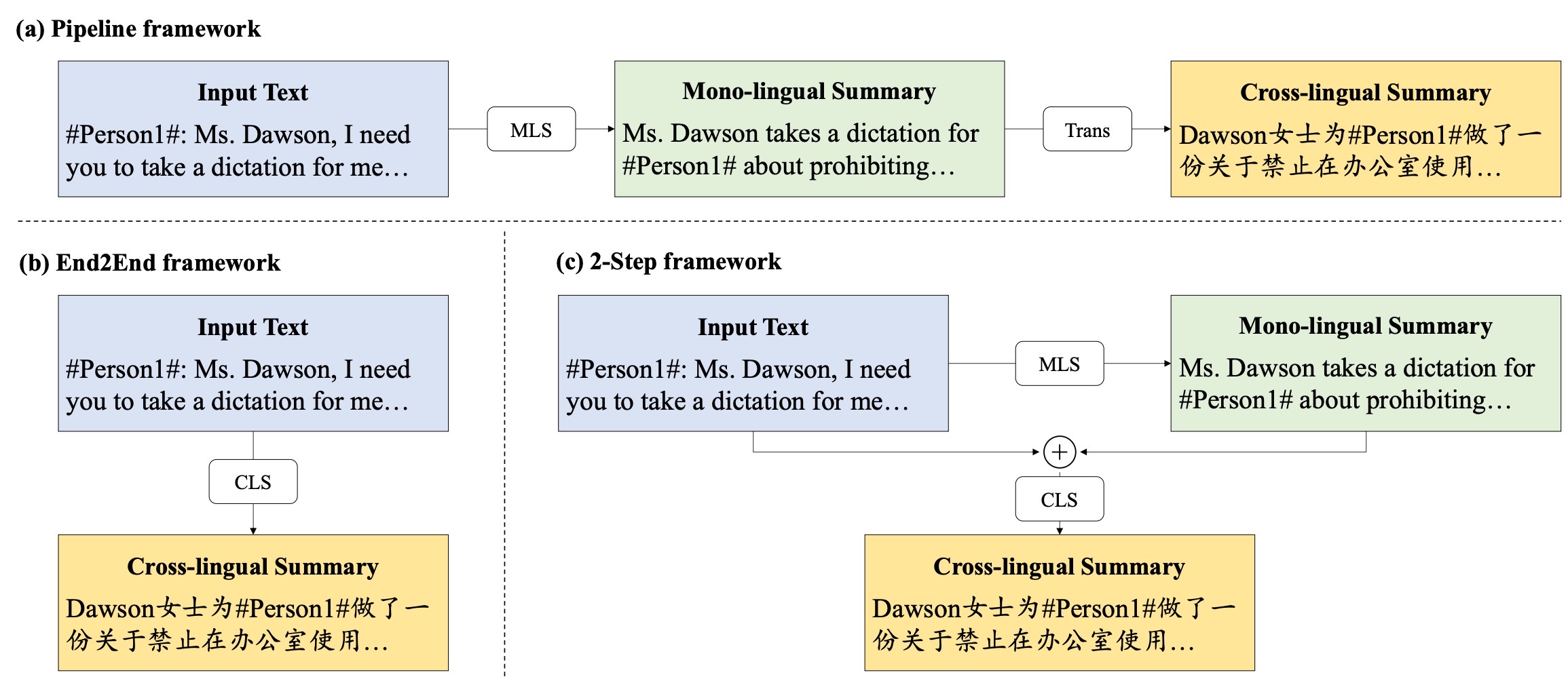

Yulong Chen, Huajian Zhang, Yijie Zhou, Xuefeng Bai, Yueguan Wang, Ming Zhong, Jianhao Yan, Yafu Li, Judy Li, Michael Zhu, Yue Zhang ACL, 2023 project page / paper link We propose ConvSumX, a cross-lingual conversation summarization benchmark, through a new annotation schema that explicitly considers source input context. |

|

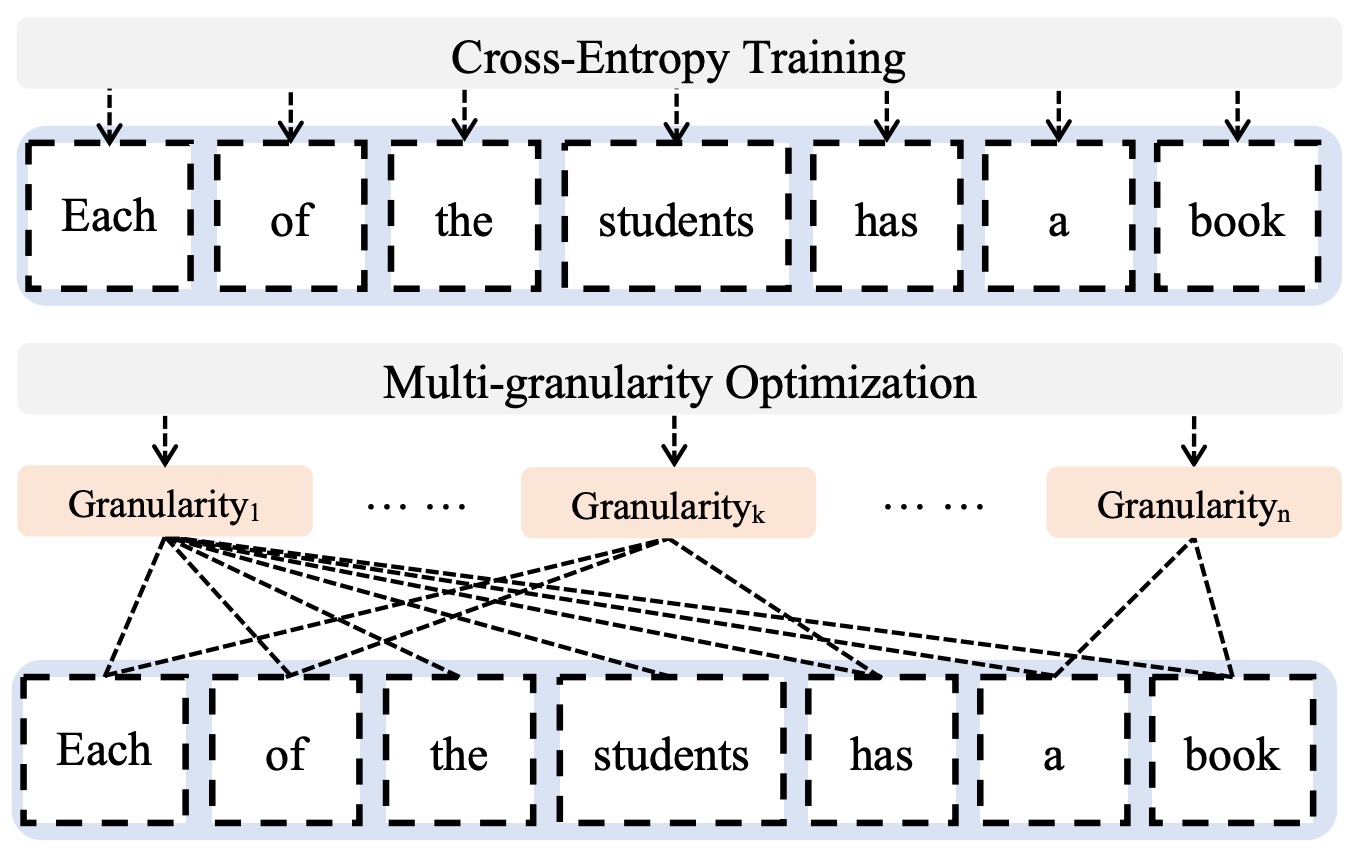

Yafu Li, Leyang Cui, Yongjing Yin, Yue Zhang EMNLP, 2022 project page / paper link We propose multi-granularity optimization for non-autoregressive translation, which collects model behaviors on translation segments of various granularities and integrates feedback for backpropagation. |

|

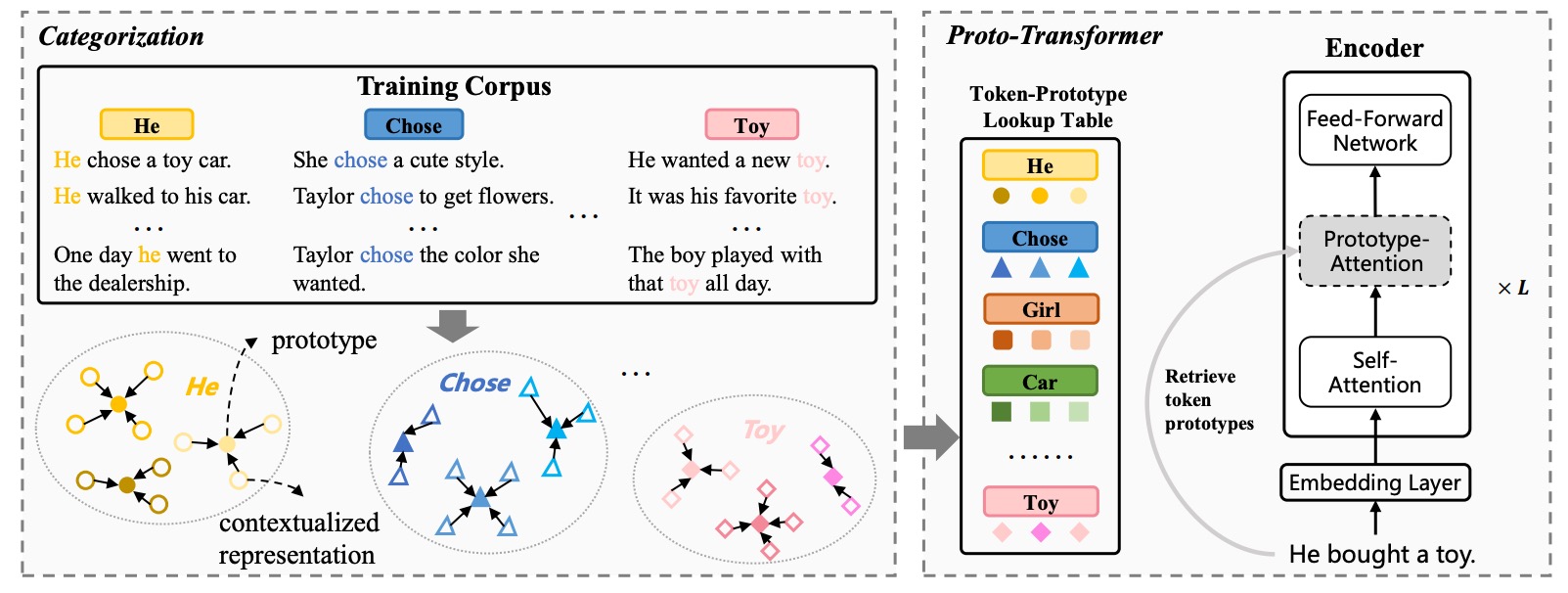

Yongjing Yin, Yafu Li, Fandong Meng, Jie Zhou, Yue Zhang COLING, 2022 paper link Learning of semantics of atoms and compositions can be improved by introducing categorization to the source contextualized representations. |

|

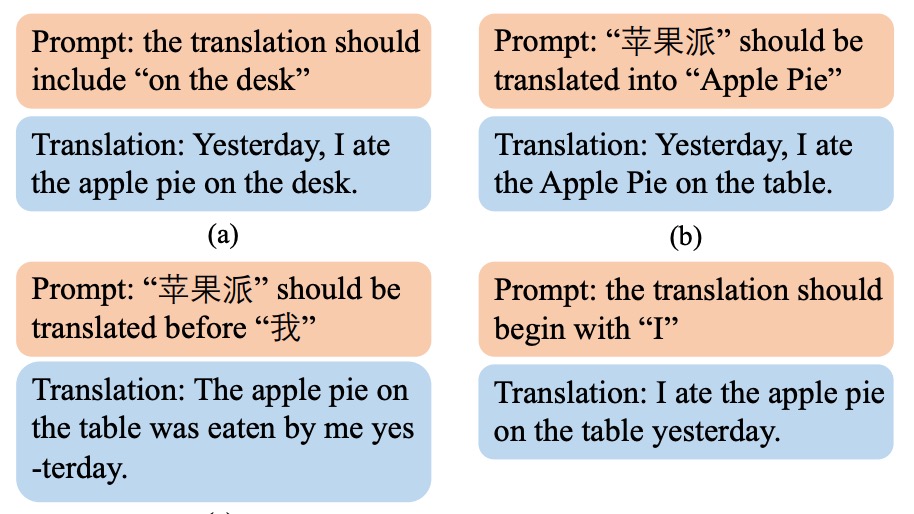

Yafu Li, Yongjing Yin, Jing Li, Yue Zhang ACL Findings, 2022 project page / paper link Versatile prompts can be effectively integrated into a single translation model. |

|

Leyang Cui*, Yafu Li*, Yue Zhang TASLP, * equal contribution paper link We extend the label attention network (LAN) to general sequence labeling tasks, including non-autoregressive translation. |

|

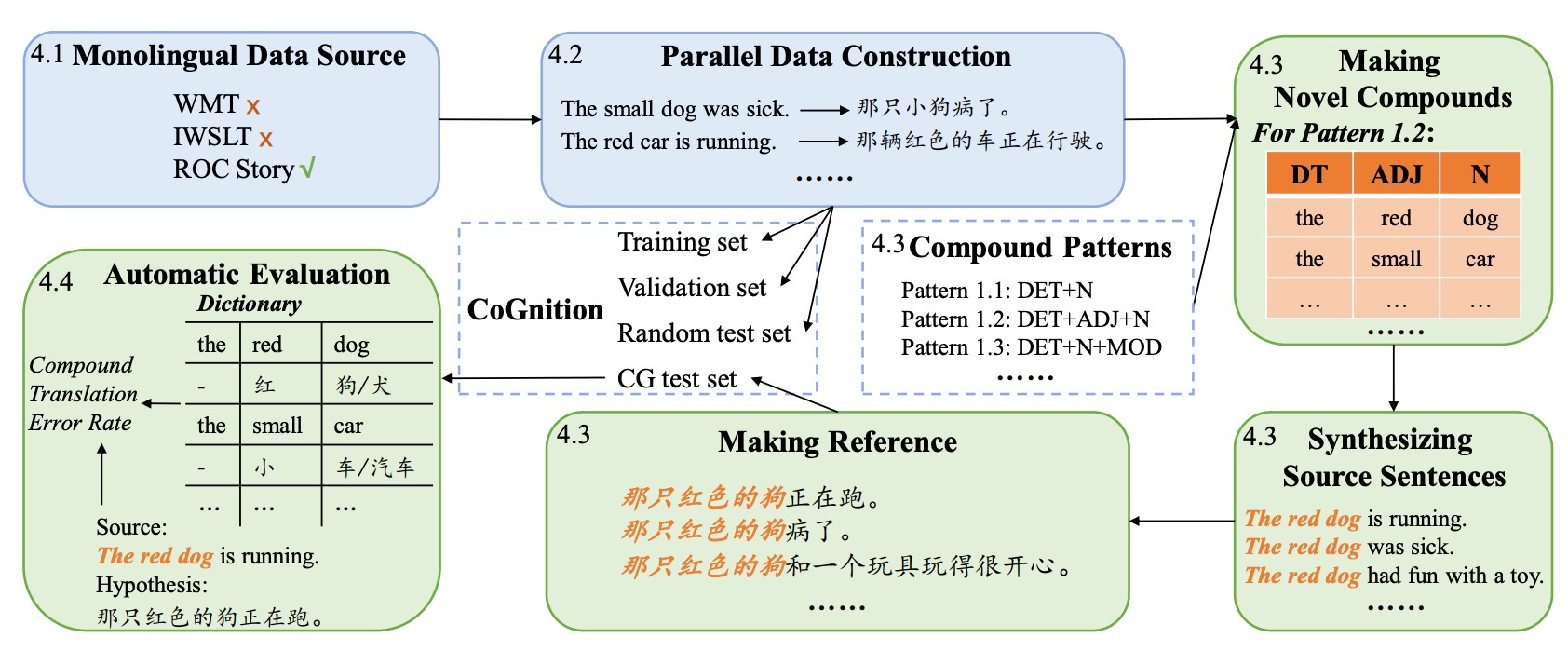

Yafu Li, Yongjing Yin, Yulong Chen, Yue Zhang ACL, 2021 project page / paper link Neural machine translation suffers from poor compositionality. |

|

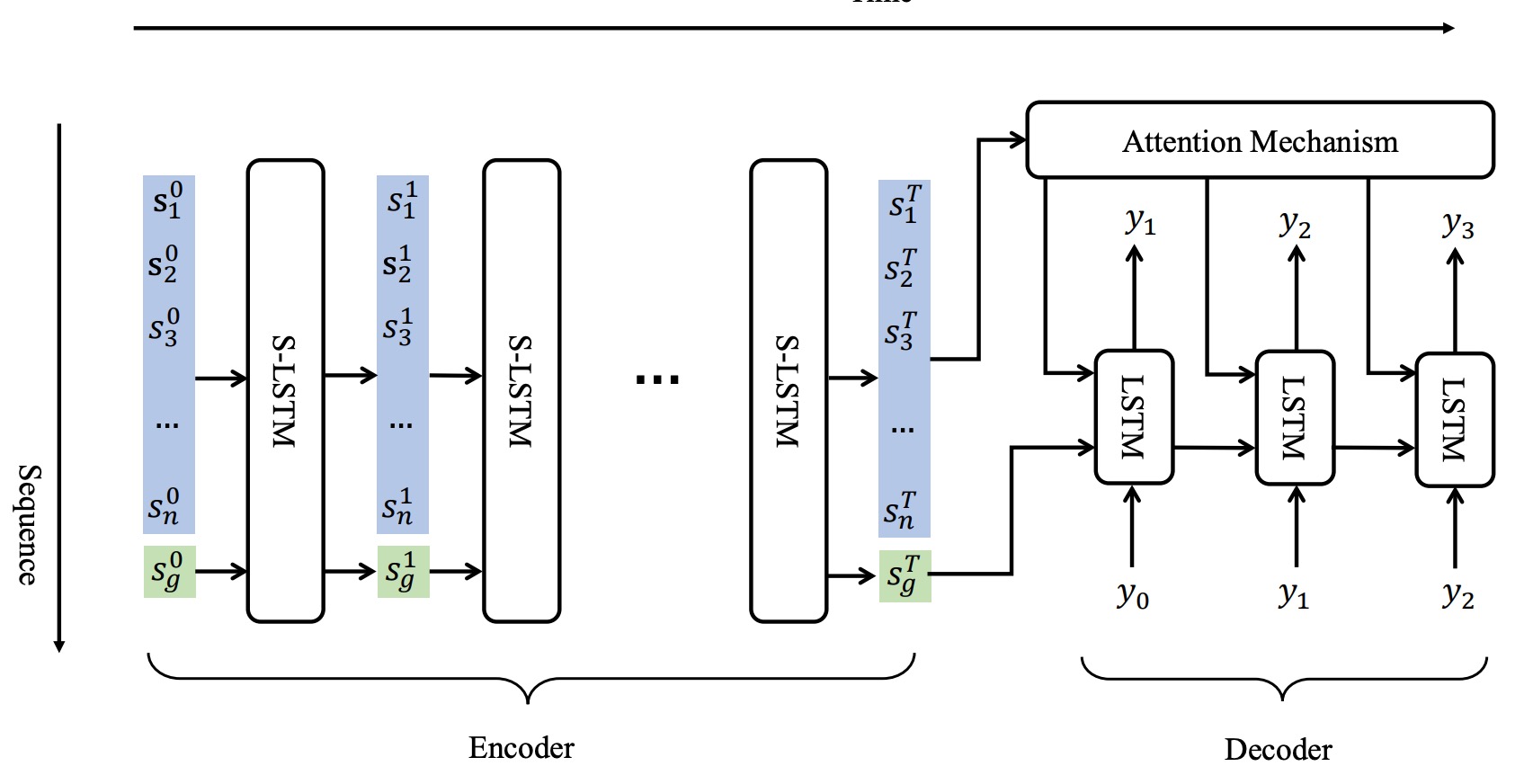

Xuefeng Bai, Yafu Li, Zhirui Zhang, Mingzhou Xu, Boxing Chen, Weihua Luo, Derek Wong, Yue Zhang NLPCC, 2021 paper link An alternative sequence-to-sequence model architecture achieves performance comparable to the Transformer. |

|

PhD candidate in Computer Science, Zhejiang University and Westlake University (2020.9-now). Master of Science in Artificial Intelligence, University of Edinburgh (2017.9-2018.11). Bachelor of Engineering in Electronic Information Engineering, Wuhan University (2013.9-2017.6). |

|

Research Intern at Tencent AI Lab (2022.10-now). Algorithm Engineer at Noah's Ark Lab, Huawei (2018.12-2020.6). Software Engineering Intern at VMware, Beijing (2016.9-2017.5). |

|

Reviewer: COLING 2022, EMNLP 2022, ACL 2023. |

|

Website's code is from Jon Barron. |